Big Data technologies encompass the tools, frameworks, and techniques used to handle and analyze large amounts of structured and unstructured data. They are designed for data that is too large, complex, and diverse for traditional data processing tools to manage efficiently.

Big Data technologies are distinguished by their capacity to process and analyze very large amounts of data. They are built for distributed computing, which means they run on clusters of computers and process data in parallel. This lets organizations store, process, and analyze vast amounts of data efficiently and cost-effectively.

Distributed file systems, such as the Hadoop Distributed File System (HDFS), and distributed data processing engines, such as Apache Spark, are essential components of Big Data technology. The Big Data ecosystem also includes databases optimized for Big Data workloads, such as NoSQL databases, and real-time data streaming and processing tools like Apache Kafka and Apache Flink.

Finance, healthcare, retail, and manufacturing are just a few of the sectors that use Big Data technology. Insights from Big Data analytics can help organizations improve operational efficiency, make better business decisions, and gain a competitive advantage.

Types of Big Data Technologies

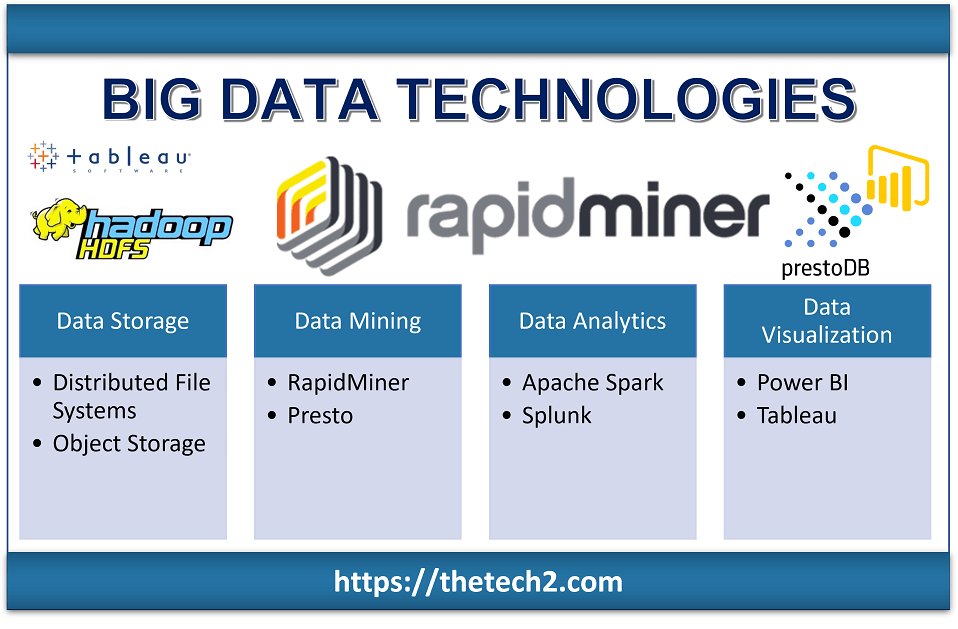

Data storage, mining, analytics, and visualization are the primary types of big data technologies. Each of these is associated with specific tools, and depending on the type of big data technology needed, you’ll want to select the right tool for your business needs.

Data Storage

Big Data technology relies heavily on data storage. As the volume of data generated and collected by organizations increases, storing and managing that data becomes increasingly difficult. Traditional data storage technologies like relational databases are not built to manage Big Data’s scale and complexity.

Big Data storage technologies are intended for storing and managing vast amounts of data across distributed systems. They are designed for high availability, scalability, and fault tolerance, allowing organizations to reliably and efficiently store and access massive quantities of data.

Several Big Data storage technologies are available today, each designed to meet the specific requirements of Big Data workloads. Here are some of the most common:

Distributed file systems

Distributed file systems are a form of Big Data storage technology that uses a distributed network of computers to store large amounts of data. A distributed file system aims to provide high availability, fault tolerance, and scalability while allowing efficient access to data from numerous network nodes.

The Hadoop Distributed File System (HDFS), used by many organizations to store and process large amounts of data, is one of the best-known distributed file systems. HDFS is an open-source distributed file system and part of the Apache Hadoop project. It is designed to run on commodity hardware and offers high-throughput access to large datasets. You can learn more about HDFS on Wikipedia.

HDFS works by dividing large files into fixed-size blocks (128 MB by default in Hadoop 2 and later) and distributing these blocks across the nodes of a cluster. Each block is replicated (three copies by default) to ensure high availability and fault tolerance, so if one node fails, its data can still be read from replicas on other nodes in the cluster.
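To make the block-and-replica idea concrete, here is a minimal simulation in Python. It assumes the HDFS defaults of 128 MB blocks and a replication factor of 3; the round-robin placement in `place_replicas` is a hypothetical simplification, since the real NameNode uses a rack-aware placement policy. All function names are illustrative, not part of any Hadoop API.

```python
# Assumed defaults: HDFS uses 128 MB blocks (dfs.blocksize) and 3 replicas
# (dfs.replication) unless a cluster overrides them.
BLOCK_SIZE = 128 * 1024 * 1024
REPLICATION = 3


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of `file_size` bytes is split into."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block holds only the leftover bytes
    return sizes


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes.

    Hypothetical round-robin policy for illustration only; the real HDFS
    NameNode uses a rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    return {
        block_id: [nodes[(block_id + i) % len(nodes)] for i in range(replication)]
        for block_id in range(num_blocks)
    }


def readable_after_failure(placement, failed_node):
    """True if every block still has a replica on a node other than `failed_node`."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


nodes = ["node1", "node2", "node3", "node4"]
blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MB file
placement = place_replicas(len(blocks), nodes)
print([size // (1024 * 1024) for size in blocks])  # [128, 128, 44]
print(placement[0])                                 # ['node1', 'node2', 'node3']
print(readable_after_failure(placement, "node2"))   # True
```

Because every block lives on three distinct nodes, losing any single node still leaves at least two readable copies of each block.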

Google File System (GFS) and GlusterFS are two other examples of distributed file systems. GFS is a proprietary file system developed by Google for storing large datasets across a distributed network of computers. GlusterFS is an open-source distributed file system that provides flexible, high-performance data access.

Distributed file systems are critical to Big Data technology because they let organizations store and process large datasets while maintaining high availability and fault tolerance, which in turn supports the data processing and analytics that drive better decision-making and business outcomes.

Object Storage

Object storage is a Big Data storage technology that provides highly scalable, cost-effective storage for large quantities of unstructured data such as documents, images, and videos. It is often used for data that is rarely accessed but must be retained for long periods.

Data is stored in object storage as objects, data units that include the data, metadata, and a unique identifier. Each object is stored in a flat address space, simplifying data access and retrieval from the system.

Object storage systems are designed for scale and durability, storing petabytes or even exabytes of data across a distributed network of servers. Object storage is typically accessed through APIs (usually over HTTP), which makes it straightforward to integrate with other applications and services.
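The object model described above (data plus metadata plus a unique identifier, all in a flat address space reached through an API) can be sketched with a small in-memory class. `ObjectStore` and its `put`/`get`/`list_keys` methods are hypothetical names chosen for illustration; real services such as Amazon S3 expose comparable operations over HTTP with their own, different APIs.

```python
import hashlib
import uuid


class ObjectStore:
    """Toy object store: a flat namespace mapping keys to data plus metadata.

    Hypothetical class for illustration; it is not the API of Amazon S3,
    Google Cloud Storage, or Azure Blob Storage.
    """

    def __init__(self):
        # Flat address space: there are no real directories, only unique keys.
        self._objects = {}

    def put(self, data: bytes, metadata=None, key=None) -> str:
        """Store an object and return its unique identifier (its key)."""
        key = key or str(uuid.uuid4())  # generate an ID if the caller gives none
        self._objects[key] = {
            "data": data,
            "metadata": dict(metadata or {}),
            "checksum": hashlib.sha256(data).hexdigest(),  # integrity check
        }
        return key

    def get(self, key: str):
        """Return (data, metadata) for `key`; raises KeyError if it is absent."""
        obj = self._objects[key]
        return obj["data"], obj["metadata"]

    def list_keys(self, prefix: str = ""):
        """List keys starting with `prefix`; 'folders' are only key prefixes."""
        return sorted(k for k in self._objects if k.startswith(prefix))


store = ObjectStore()
key = store.put(b"fake image bytes", {"content-type": "image/jpeg"}, key="photos/2024/cat.jpg")
data, meta = store.get(key)
print(meta["content-type"])        # image/jpeg
print(store.list_keys("photos/"))  # ['photos/2024/cat.jpg']
```

Note that `photos/2024/` is not a directory: it is just part of the key, which is why listing by prefix is the usual way to browse an object store.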

Some of the most popular object storage services are Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage. These cloud-based services are highly scalable and durable and can be accessed from anywhere with an internet connection.

Object storage is essential to Big Data technology because it lets organizations store and manage large amounts of unstructured data at low cost, keeping that data available for processing and analytics that support better decision-making.

NoSQL Databases

NoSQL databases are a type of Big Data technology that can handle vast amounts of unstructured or semi-structured data, such as social media posts, sensor data, and log files. Unlike traditional relational databases, which store data in tables with set schemas, NoSQL databases use a flexible schema model that allows for more dynamic data structures.
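The flexible schema model can be illustrated with plain Python dictionaries standing in for documents. This is a hypothetical sketch of the document-store idea, not the API of any particular database; the query-by-example style of `find` is loosely similar to how MongoDB's `find` accepts a filter document. The sample posts are made up.

```python
_MISSING = object()  # sentinel so a missing field never equals a queried value

# Hypothetical "collection": documents are plain dicts and need not share fields.
posts = [
    {"_id": 1, "user": "ana", "text": "Launch day!", "tags": ["news"]},
    {"_id": 2, "user": "ben", "text": "Nice pic", "image_url": "https://example.com/p.png"},
    {"_id": 3, "user": "ana", "text": "Sensor offline",
     "device": {"id": "s-17", "temp_c": 81.5}},  # nested sub-document
]


def find(collection, **criteria):
    """Return documents whose top-level fields equal every given criterion."""
    return [
        doc for doc in collection
        if all(doc.get(field, _MISSING) == value for field, value in criteria.items())
    ]


def add_field(collection, doc_id, field, value):
    """Add a field to one document; no schema migration is needed for the rest."""
    for doc in collection:
        if doc["_id"] == doc_id:
            doc[field] = value
            return doc
    raise KeyError(doc_id)


print([doc["_id"] for doc in find(posts, user="ana")])  # [1, 3]
add_field(posts, 2, "likes", 5)
print([doc["_id"] for doc in find(posts, likes=5)])      # [2]
```

In a relational table, adding `likes` would mean altering the table for every row; here only the one document changes.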

NoSQL databases are designed to be distributed and scalable, which makes them well suited to Big Data workloads. They are commonly used in applications that require high scalability, availability, and speed, such as e-commerce, gaming, and social media.

MongoDB, Cassandra, and HBase are some of the best-known NoSQL databases. MongoDB is a document-oriented database built for scale and flexibility. Cassandra is a distributed wide-column database with high availability and linear scalability, well suited to very large datasets. HBase is a wide-column database that runs on top of HDFS and provides low-latency random read and write access to large tables.
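Much of Cassandra's linear scalability comes from how it spreads data: each node owns tokens on a hash ring, and a row's partition key is hashed to find which nodes store it. The sketch below is a generic consistent-hashing ring with virtual nodes, written under simplifying assumptions: it uses SHA-256 rather than Cassandra's default Murmur3 partitioner, and `HashRing` and `replicas` are hypothetical names, not Cassandra APIs.

```python
import bisect
import hashlib


def token(key: str) -> int:
    """Map a string to a position on the ring.

    SHA-256 is used here for simplicity; Cassandra's default partitioner uses
    Murmur3 instead.
    """
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Minimal consistent-hashing ring with virtual nodes (illustrative sketch)."""

    def __init__(self, nodes, vnodes=8):
        # Each physical node owns several tokens ("virtual nodes") on the ring.
        self._ring = sorted(
            (token(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._tokens = [t for t, _ in self._ring]

    def replicas(self, key, n=3):
        """Walk clockwise from the key's token and collect up to n distinct nodes."""
        start = bisect.bisect(self._tokens, token(key)) % len(self._ring)
        owners = []
        for i in range(len(self._ring)):
            node = self._ring[(start + i) % len(self._ring)][1]
            if node not in owners:
                owners.append(node)
                if len(owners) == n:
                    break
        return owners


ring = HashRing(["n1", "n2", "n3", "n4"])
print(ring.replicas("user:42"))  # three distinct nodes, the same on every call

# Adding a node only moves keys onto the new node; nothing else is reshuffled.
bigger = HashRing(["n1", "n2", "n3", "n4", "n5"])
keys = [f"user:{i}" for i in range(1000)]
moved = [k for k in keys if ring.replicas(k, 1) != bigger.replicas(k, 1)]
print(all(bigger.replicas(k, 1) == ["n5"] for k in moved))  # True
```

The last check shows why this design scales linearly: growing the cluster relocates only the share of keys the new node takes over, instead of rehashing everything.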

NoSQL databases are essential to Big Data technology because they let organizations store and process large amounts of unstructured and semi-structured data with high scalability and performance, strengthening the analytics that drive better decision-making.

In-Memory Databases

In-memory databases (IMDBs) are a Big Data technology that stores data in main memory (RAM) rather than on disk or other long-term storage devices. This provides much faster data access, because data is read and written directly in memory instead of on disk.

High-frequency trading, real-time analytics, and gaming are examples of applications that use IMDBs. IMDBs can also handle large amounts of data, because they can be distributed across many servers to scale out.

Redis, Memcached, and Apache Ignite are three prominent in-memory data stores. Redis is a key-value store that can be used for caching, messaging, and real-time statistics. Memcached is a distributed memory caching system frequently used to speed up dynamic web applications. Apache Ignite is an in-memory data grid that provides high performance and scalability for distributed applications.
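A common way applications use Memcached or Redis is the cache-aside pattern: check the in-memory store first and fall back to the slower database only on a miss, storing the result with an expiry time. The sketch below imitates that pattern with a small in-process `TTLCache`; the class, `get_user`, and `slow_lookup` are hypothetical names, and a real deployment would talk to a Redis or Memcached server through a client library instead.

```python
import time


class TTLCache:
    """Tiny in-memory key-value cache with per-entry expiry.

    Hypothetical stand-in for a Memcached/Redis-style cache; real systems add
    networking, eviction policies, and (for Redis) optional persistence.
    """

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable clock makes expiry easy to test
        self._data = {}     # key -> (value, expires_at)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # lazily drop expired entries on read
            return None
        return value

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)


def get_user(user_id, cache, load_from_db):
    """Cache-aside read: try memory first, fall back to the slow store on a miss."""
    cached = cache.get(user_id)
    if cached is not None:
        return cached
    value = load_from_db(user_id)  # slow path, e.g. a disk-based database
    cache.set(user_id, value)
    return value


def slow_lookup(user_id):
    # Hypothetical stand-in for a query against a disk-based database.
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=60)
print(get_user(7, cache, slow_lookup))  # first call misses and loads from the "database"
print(get_user(7, cache, slow_lookup))  # second call is served from memory
```

The expiry keeps cached data from going stale indefinitely, which is why both Memcached and Redis let callers attach a time-to-live to keys.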

In-memory databases are an essential component of Big Data technology because they let organizations process and analyze data in real time and act on the results quickly. Leveraging in-memory databases can help organizations stay competitive in today’s fast-paced business environment.

Cloud Storage

Cloud storage is a Big Data technology that lets users store and retrieve data over the internet on remote servers hosted by a third-party provider. It is usually offered as a service, with users paying for the amount of storage they use, typically on a monthly or annual basis.

Cloud storage is designed to be highly scalable and flexible, letting users store and access data from any location with an internet connection. It is especially useful for amounts of data that would be too costly or difficult to store on-premises.

Amazon Web Services (AWS), Google Cloud, and Microsoft Azure are some of the most prominent cloud storage providers. They offer a variety of storage options, such as object storage, block storage, and file storage, with different levels of performance, durability, and cost.

Cloud storage is essential to Big Data technology because it lets organizations store and manage large volumes of data with high scalability and availability, without building and maintaining that capacity on-premises.

Data Mining

Data mining, a key component of Big Data technology, is the process of finding patterns and insights in large amounts of data. It involves analyzing large datasets with statistical and machine learning algorithms to uncover patterns, trends, and relationships that can be used to drive business decisions.
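One classic data mining task is frequent itemset mining: finding items that are often bought together. The sketch below implements the support-counting step for item pairs, including the Apriori-style pruning of infrequent single items. It is a simplified illustration limited to pairs, not a full Apriori implementation, and the shopping baskets are made-up data.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(transactions, min_support):
    """Return item pairs appearing in at least `min_support` fraction of transactions.

    Mirrors the first two passes of Apriori: keep frequent single items, then
    count only pairs built from those items.
    """
    n = len(transactions)
    # Pass 1: count each item once per transaction and keep the frequent ones.
    item_counts = Counter(item for t in transactions for item in set(t))
    frequent_items = {i for i, c in item_counts.items() if c / n >= min_support}
    # Pass 2: count pairs made only of frequent items (Apriori pruning).
    pair_counts = Counter()
    for t in transactions:
        items = sorted(set(t) & frequent_items)
        pair_counts.update(combinations(items, 2))
    return {pair: c / n for pair, c in pair_counts.items() if c / n >= min_support}


# Made-up shopping baskets for illustration.
baskets = [
    ["bread", "milk"],
    ["bread", "diapers", "beer", "eggs"],
    ["milk", "diapers", "beer", "cola"],
    ["bread", "milk", "diapers", "beer"],
    ["bread", "milk", "diapers", "cola"],
]
print(sorted(frequent_pairs(baskets, min_support=0.6)))
# [('beer', 'diapers'), ('bread', 'diapers'), ('bread', 'milk'), ('diapers', 'milk')]
```

Pruning infrequent items first matters at scale: a pair can never be more frequent than either of its items, so skipping rare items avoids counting most candidate pairs.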

Data mining is useful for processing and analyzing unstructured data, such as text, images, and video, which can be difficult to analyze with conventional data analysis techniques. It is also helpful for detecting hidden relationships and patterns in large datasets that are not always obvious.

Data mining methods are used in many applications, such as customer relationship management, fraud detection, and predictive analytics. R, Python, and Apache Mahout are some prominent data mining tools.
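As a small illustration of the fraud-detection use case, the sketch below flags transaction amounts that lie unusually far from the mean, measured in standard deviations (a z-score). This is a deliberately simple statistical baseline chosen for illustration, not how production fraud systems work; `flag_outliers` is a hypothetical helper and the amounts are made up.

```python
from statistics import mean, stdev


def flag_outliers(amounts, threshold=3.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    if len(amounts) < 2:
        return []  # a sample standard deviation needs at least two values
    mu, sigma = mean(amounts), stdev(amounts)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, x in enumerate(amounts) if abs(x - mu) / sigma > threshold]


# Made-up card transaction amounts; the last one is suspicious.
amounts = [20, 25, 22, 19, 24, 21, 23, 950]
print(flag_outliers(amounts, threshold=2.0))  # [7]
```

With only eight values, a single outlier cannot reach a z-score of 3 (the maximum possible is about 2.47 for this sample size), which is why the example lowers the threshold to 2. On real data, analysts typically use far larger samples and more robust methods.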

Data mining is a critical component of Big Data technology because it lets organizations extract insights and spot patterns in massive amounts of data. Organizations can use these techniques to better understand their customers, find growth opportunities, and optimize business processes, which can lead to better outcomes and a competitive advantage in the marketplace.

RapidMiner

RapidMiner is a popular open-source data science platform that offers a broad range of data mining, machine learning, and predictive analytics tools and features. RapidMiner is intended to make it simple for users of different technical skill levels to work with large datasets and create predictive models.

RapidMiner’s drag-and-drop interface lets users easily import data from databases, spreadsheets, and web services. The data can then be preprocessed and transformed with a wide range of pre-built tools and algorithms, and predictive models can be built with machine-learning techniques such as regression, classification, and clustering.

RapidMiner also includes a suite of visualization and reporting tools that enable users to examine and analyze their data and convey their findings to stakeholders.

RapidMiner is well known in sectors including healthcare, finance, and retail for its ease of use, flexibility, and scalability. Alongside the open-source version, RapidMiner offers a commercial version with extra features and support.

Presto

Presto is a distributed SQL query engine that can handle vast amounts of data from many different sources. It is an open-source Big Data technology originally developed at Facebook. Presto lets users query data with standard SQL syntax, making it accessible to anyone who already knows SQL.

Presto is designed to be highly scalable and flexible: through connectors, it can query data stored in the Hadoop Distributed File System (HDFS), Amazon S3, and many relational databases. Presto uses a distributed architecture in which a query runs in parallel across many servers, which can significantly reduce query times on large datasets.
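Part of what makes parallel execution effective for aggregations is a two-step plan: each worker computes a partial aggregate over its own slice ("split") of the data, and the partial results are then merged into the final answer. The sketch below imitates that idea for a `SUM(amount) ... GROUP BY region` query, with Python threads standing in for worker servers. It is a conceptual illustration of how a distributed engine such as Presto can parallelize a grouped aggregation, not Presto's actual code; the function names and rows are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partial_aggregate(split):
    """Worker step: SUM(amount) GROUP BY region over one split of the data."""
    totals = Counter()
    for row in split:
        totals[row["region"]] += row["amount"]
    return totals


def distributed_sum_by_region(splits, max_workers=4):
    """Coordinator step: run partial aggregations in parallel, then merge them."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(partial_aggregate, splits))  # order preserved
    final = Counter()
    for partial in partials:
        final.update(partial)  # Counter.update adds counts instead of replacing them
    return dict(final)


# Hypothetical data already divided into three splits, one per "worker".
splits = [
    [{"region": "eu", "amount": 10}, {"region": "us", "amount": 5}],
    [{"region": "eu", "amount": 7}],
    [{"region": "us", "amount": 1}, {"region": "apac", "amount": 4}],
]
print(distributed_sum_by_region(splits))  # {'eu': 17, 'us': 6, 'apac': 4}
```

Only the small per-group totals travel between steps, not the raw rows, which is what keeps this approach fast on large datasets.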

Presto is also known for handling complex queries that join multiple datasets, filter data, and aggregate results. This makes it an effective tool for data analytics and business intelligence applications.
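The kind of query described above (a join, a filter, and an aggregation) is ordinary standard SQL. Because a Presto cluster is not available in a self-contained example, the sketch below runs an equivalent query against Python's built-in `sqlite3` module as a local stand-in. The `orders` and `customers` tables and their rows are made up; in Presto, tables are addressed as `catalog.schema.table`, so the same query could join, for example, a table in a Hive catalog with one in a MySQL catalog.

```python
import sqlite3

# In-memory SQLite database standing in for two data sources (hypothetical tables).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL, status TEXT);
    CREATE TABLE customers (customer_id INTEGER, country TEXT);
    INSERT INTO orders VALUES (1, 10, 120.0, 'shipped'), (2, 11, 80.0, 'shipped'),
                              (3, 10, 40.0, 'cancelled'), (4, 12, 200.0, 'shipped');
    INSERT INTO customers VALUES (10, 'DE'), (11, 'US'), (12, 'DE');
""")

QUERY = """
    SELECT c.country, COUNT(*) AS orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON o.customer_id = c.customer_id  -- join two datasets
    WHERE o.status = 'shipped'                             -- filter
    GROUP BY c.country                                     -- aggregate
    ORDER BY revenue DESC
"""
rows = conn.execute(QUERY).fetchall()
print(rows)  # [('DE', 2, 320.0), ('US', 1, 80.0)]
```

The point of the example is the query shape rather than the engine: Presto accepts this style of ANSI SQL and distributes its execution across a cluster.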

Data Analytics

Big data analytics uses these technologies to clean and transform data into information that can drive business decisions. After data mining, users run algorithms, build models, and perform other analytical tasks with tools such as Apache Spark and Splunk.
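The clean-then-transform workflow can be sketched in plain Python on a few rows of raw sales records. Tools like Apache Spark apply the same kind of steps (parsing, filtering out bad records, normalizing values, grouping and aggregating) to distributed datasets; in PySpark, for example, they would be expressed with DataFrame operations such as `filter` and `groupBy`. The record format and the helper names `clean` and `daily_revenue_per_store` are hypothetical.

```python
from collections import defaultdict


def clean(lines):
    """Parse 'date,store,amount' lines, normalizing fields and skipping bad rows."""
    for line in lines:
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 3:
            continue  # wrong number of fields (including empty lines)
        date, store, amount = parts
        try:
            value = float(amount)
        except ValueError:
            continue  # unparseable amount
        yield {"date": date, "store": store.lower(), "amount": value}


def daily_revenue_per_store(records):
    """Transform cleaned records into total revenue per (store, date)."""
    totals = defaultdict(float)
    for record in records:
        totals[(record["store"], record["date"])] += record["amount"]
    return dict(totals)


# Made-up raw input with inconsistent casing, stray spaces, and bad rows.
raw = [
    "2024-05-01,store-1, 19.50",
    "2024-05-01,STORE-1,5.50",
    "2024-05-02,store-1,not_a_number",
    "",
    "2024-05-02,store-2,12.50",
]
print(daily_revenue_per_store(clean(raw)))
# {('store-1', '2024-05-01'): 25.0, ('store-2', '2024-05-02'): 12.5}
```

Cleaning before aggregating matters: without lowercasing, `STORE-1` and `store-1` would be counted as two different stores.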

Data Visualization

Big data tools can be used to create clear, compelling data visualizations. Data visualization is a valuable skill in data-oriented roles, where it is used to present findings and recommendations on profitability and operations to stakeholders. Power BI, Tableau, and Looker are examples of data visualization tools.
